3D Photography

using Context-aware Layered Depth Inpainting

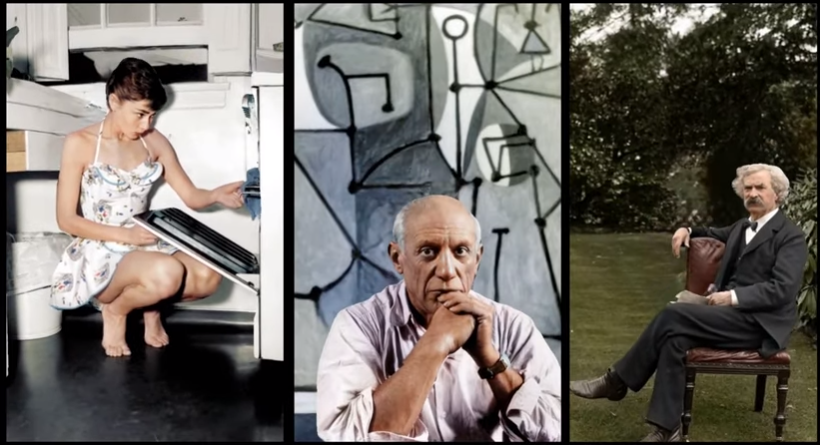

This is an interesting approach for converting a single RGB-D input image into a 3D photo, i.e., a multi-layer representation for novel view synthesis that contains hallucinated color and depth structures in regions occluded in the original view. Using a Layered Depth Image (LDI) with explicit pixel connectivity as the underlying representation, the authors present a learning-based inpainting model that iteratively synthesizes new local color-and-depth content into the occluded regions in a spatially context-aware manner.
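
To make the idea of a Layered Depth Image with explicit pixel connectivity more concrete, here is a minimal Python sketch. It is not the authors' implementation. The names `LDIPixel`, `build_ldi`, `cut_depth_edges`, and `extend_into_occlusion`, the 4-neighbor layout, and the fixed depth threshold are all assumptions made for illustration. In particular, the color and depth of the new pixel are passed in by hand here, whereas the summarized method synthesizes them with a learned inpainting model.

```python
from dataclasses import dataclass, field

# Offsets for the four cardinal neighbors (hypothetical naming).
DIRECTIONS = {"left": (0, -1), "right": (0, 1), "up": (-1, 0), "down": (1, 0)}
OPPOSITE = {"left": "right", "right": "left", "up": "down", "down": "up"}


@dataclass
class LDIPixel:
    """One color-and-depth sample with explicit links to its neighbors."""
    row: int
    col: int
    color: tuple
    depth: float
    # Maps a direction name to the neighboring LDIPixel, or None if unlinked.
    neighbors: dict = field(default_factory=lambda: dict.fromkeys(DIRECTIONS))


def build_ldi(colors, depths):
    """Build a single-layer, fully 4-connected LDI from an RGB-D grid."""
    height, width = len(depths), len(depths[0])
    # layers[r][c] is a list, so a position can later hold several samples.
    layers = [[[LDIPixel(r, c, colors[r][c], depths[r][c])]
               for c in range(width)] for r in range(height)]
    for r in range(height):
        for c in range(width):
            pixel = layers[r][c][0]
            for name, (dr, dc) in DIRECTIONS.items():
                nr, nc = r + dr, c + dc
                if 0 <= nr < height and 0 <= nc < width:
                    pixel.neighbors[name] = layers[nr][nc][0]
    return layers


def cut_depth_edges(layers, threshold):
    """Sever links across depth jumps larger than `threshold` (assumed rule).

    Returns the number of severed links, counting each pixel pair once.
    """
    cut = 0
    for row in layers:
        for stack in row:
            for pixel in stack:
                for name, other in pixel.neighbors.items():
                    if other is not None and abs(pixel.depth - other.depth) > threshold:
                        pixel.neighbors[name] = None
                        other.neighbors[OPPOSITE[name]] = None
                        cut += 1
    return cut


def extend_into_occlusion(layers, source, direction, color, depth):
    """Add a new sample next to `source`, on an extra layer at that position."""
    dr, dc = DIRECTIONS[direction]
    row, col = source.row + dr, source.col + dc
    new_pixel = LDIPixel(row, col, color, depth)
    new_pixel.neighbors[OPPOSITE[direction]] = source
    source.neighbors[direction] = new_pixel
    layers[row][col].append(new_pixel)
    return new_pixel


# Toy 1x4 image: near foreground (depth 1) on the left, far background on the right.
toy_colors = [[(255, 0, 0), (255, 0, 0), (0, 0, 255), (0, 0, 255)]]
toy_depths = [[1.0, 1.0, 5.0, 5.0]]
toy_ldi = build_ldi(toy_colors, toy_depths)
num_cut = cut_depth_edges(toy_ldi, threshold=1.0)
# Grow the background one pixel to the left, behind the foreground.
hidden = extend_into_occlusion(toy_ldi, toy_ldi[0][2][0], "left", (0, 0, 255), 5.0)
```

After the toy example runs, position `(0, 1)` holds two samples: the original foreground pixel and a background pixel hidden behind it that is linked only to its background neighbor. Storing neighbor links per sample, rather than inferring them from grid adjacency, is what lets the foreground and the newly added background coexist at the same image location without being connected to each other.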