Deep Face generator

Deep Learning based Generation of Face Images from Sketches

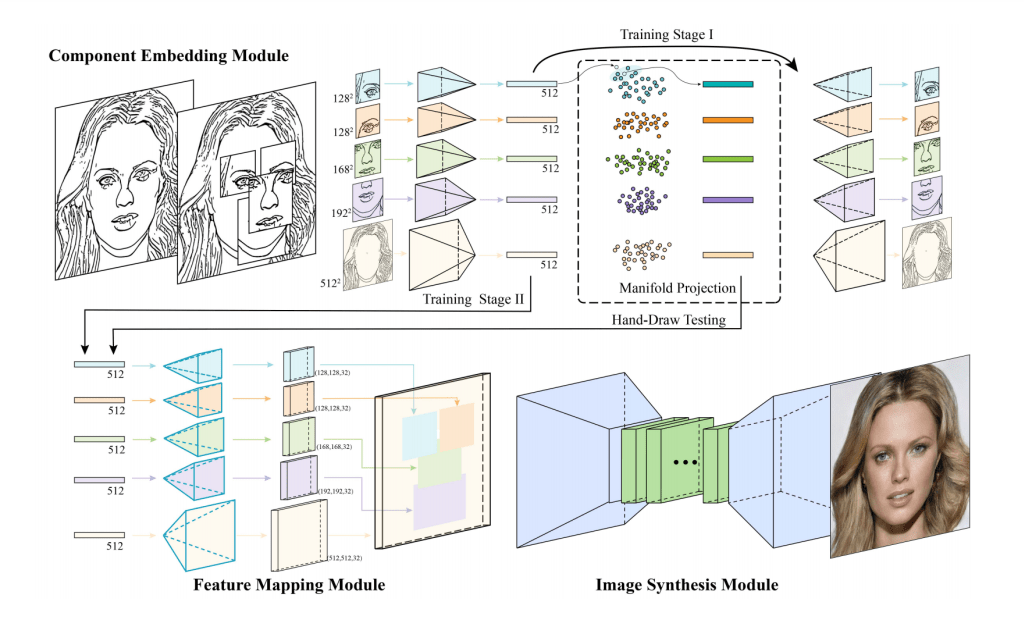

A team of researchers from the Chinese Academy of Sciences and the City University of Hong Kong presented a novel deep learning framework for synthesizing realistic face images from rough and/or incomplete freehand sketches.

The system takes a local-to-global approach. It first decomposes a sketched face into components, then refines each component by projecting it onto a component manifold defined by the existing component samples in the corresponding feature space. The refined feature vectors are mapped to feature maps, which are combined spatially and finally translated into a realistic image. This design naturally supports local editing and makes the network easy to train from a dataset that is not very large.

Deep learning methods for sketch-to-image translation usually treat the input sketch as a ‘hard’ constraint: they take the sketch as fixed and try to reconstruct the missing texture, pattern, or shading information between line strokes. The new approach instead implicitly learns a space of plausible face sketches from real face sketch images and finds the point in this space that best approximates the input sketch.
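As a rough illustration of "finding the point in this space that best approximates the input", the sketch below projects a feature vector onto the local patch spanned by its nearest stored samples. It assumes a K-nearest-neighbor search followed by a constrained least-squares fit in the style of locally linear embedding; the function name `project_to_manifold`, the neighbor count `k`, and the regularizer `reg` are illustrative choices, not details taken from this article.

```python
import numpy as np

def project_to_manifold(query, samples, k=3, reg=1e-6):
    """Approximate `query` by an affine combination of its k nearest samples.

    query:   (d,) feature vector of one sketched component (assumed input)
    samples: (n, d) feature vectors of existing component samples
    """
    # Distance from the query to every stored sample.
    dists = np.linalg.norm(samples - query, axis=1)
    nearest = samples[np.argsort(dists)[:k]]      # (k, d)

    # Solve min_w ||query - sum_i w_i * nearest_i||^2 subject to sum_i w_i = 1
    # through the local Gram matrix of the neighbor offsets.
    diffs = nearest - query                        # (k, d)
    gram = diffs @ diffs.T                         # (k, k)
    gram += reg * np.eye(k)                        # small ridge for stability
    weights = np.linalg.solve(gram, np.ones(k))
    weights /= weights.sum()

    # The projected point is the weighted combination of the neighbors.
    return weights @ nearest


# Toy data: stored samples lie on the x-axis, the query sits slightly off it.
samples = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [10.0, 0.0]])
query = np.array([0.5, 0.3])
projected = project_to_manifold(query, samples, k=2)
```

In this toy case the off-axis query is pulled back onto the line through its two nearest samples, which mirrors how a rough component sketch would be replaced by a nearby plausible one.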

The system consists of:

- CE (Component Embedding),

- FM (Feature Mapping), and

- IS (Image Synthesis).
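The data flow through the three modules can be sketched as below. This is only a structural outline under stated assumptions: the crop windows in `WINDOWS` are hypothetical coordinates on a 512x512 sketch, the component names are illustrative, and `encode`, `feature_map`, and `synthesize` are placeholder callables standing in for the trained CE, FM, and IS networks.

```python
import numpy as np

# Hypothetical (top, left, size) windows; not the authors' actual values.
# "remainder" comes first so the facial parts are pasted over it.
WINDOWS = {
    "remainder": (0, 0, 512),
    "left_eye": (180, 110, 128),
    "right_eye": (180, 274, 128),
    "nose": (230, 192, 128),
    "mouth": (300, 170, 192),
}

def decompose(sketch):
    """Crop the sketch into one square patch per component."""
    return {n: sketch[t:t + s, l:l + s] for n, (t, l, s) in WINDOWS.items()}

def combine(feature_maps, canvas_size=512):
    """Paste per-component feature maps (C, s, s) onto one (C, H, W) canvas."""
    channels = next(iter(feature_maps.values())).shape[0]
    canvas = np.zeros((channels, canvas_size, canvas_size))
    for name, (t, l, s) in WINDOWS.items():
        canvas[:, t:t + s, l:l + s] = feature_maps[name]
    return canvas

def run_pipeline(sketch, encode, feature_map, synthesize):
    """CE -> FM -> IS, with each stage passed in as a callable."""
    parts = decompose(sketch)
    vectors = {n: encode(n, p) for n, p in parts.items()}       # CE stand-in
    maps = {n: feature_map(n, v) for n, v in vectors.items()}   # FM stand-in
    return synthesize(combine(maps))                            # IS stand-in


# Trivial stand-ins so the outline runs end to end.
encode = lambda name, patch: float(len(name))
feature_map = lambda name, v: np.full((2, WINDOWS[name][2], WINDOWS[name][2]), v)
synthesize = lambda fmap: fmap.mean(axis=0)

image = run_pipeline(np.zeros((512, 512)), encode, feature_map, synthesize)
```

Because each component travels through its own encoder and feature mapper before the combination step, editing one component only changes the corresponding region of the combined feature maps.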

Face Morphing

This framework follows a simple but effective morphing approach by:

1) decomposing a pair of source and target face sketches in the training dataset into five components;

2) encoding the component sketches as feature vectors in the corresponding feature spaces;

3) performing linear interpolation between the source and target feature vectors for the corresponding components;

4) finally feeding the interpolated feature vectors to the FM and IS modules to get intermediate face images.
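Step 3 above can be sketched in a few lines. The component names, the 4-dimensional toy vectors, and the helper `interpolate_components` are assumptions made for illustration; the encoding step and the FM and IS modules are not reproduced here.

```python
import numpy as np

# Illustrative names for the five components (an assumption of this sketch).
COMPONENTS = ("left_eye", "right_eye", "nose", "mouth", "remainder")

def interpolate_components(source, target, t):
    """Blend per-component feature vectors; t=0 gives source, t=1 gives target."""
    return {
        name: (1.0 - t) * source[name] + t * target[name]
        for name in COMPONENTS
    }


# Toy feature vectors standing in for encoded component sketches.
source = {name: np.zeros(4) for name in COMPONENTS}
target = {name: np.ones(4) for name in COMPONENTS}

# Five evenly spaced morphing steps between the two faces.
frames = [interpolate_components(source, target, t) for t in np.linspace(0.0, 1.0, 5)]
```

Each entry of `frames` would then be passed through the FM and IS modules to render one intermediate face.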

Reference

DeepFaceDrawing: Deep Generation of Face Images from Sketches